Newsletter Updates for December 2013

Hard to believe it’s been since AMPCamp 3 in August that I’ve had an editor buffer open, collecting notes to write up… New Year’s resolutions include writing a newsletter on a monthly basis!

AMPCamp 3 was a big success: over 200 people attended a two-day marathon of hands-on work with the Berkeley Stack. Spark Summit doubled that attendance, and the upcoming Spark Summit 2014 this summer is expected to double again. We had Mesos talks at each, and got to meet lots of interesting people in the community.

Travels this summer and fall took me through Boulder, Chicago, NYC, then back to the Bay Area just in time for the Mesos Townhall. Dave Lester wrote up an excellent summary of the #Townhall @Twitter.

Hands down, my favorite event was the Titan workshop in Santa Clara by Matthias Broecheler. Our course sold out to capacity – even with the impossible commute during the recent BART strike. Companies sent engineering teams for a deep dive into Titan and graph queries at scale. I’ve never learned so much about both HBase and Cassandra in any other forum: Matthias led a tour de force through systems engineering insights. The magic is in Titan’s vertex and edge representations and its efficient denormalization at scale. Given that you probably have data in HBase or Cassandra already, and that your use cases are most likely graphs anyway, the Titan abstraction atop them provides an excellent optimization. Highly recommended.

Strata

Strata NY in October was huge. The conf has grown so much that it must change venues next year – but what a rich environment. I couldn’t walk 5 meters without getting pulled into an incredible discussion. Bob Grossman’s talk Adversarial Analytics 101 was a big eye-opener, articulating what many of us have grappled with over the years – without language to convey issues/concerns to stakeholders.

A talk from Spotify reinforced an oft-repeated theme: some of the most costly issues with Hadoop at scale involve mismatches between the Java code and the underlying OS. For example, Spotify traced catastrophic NameNode failures – jobs which ran fine for everybody except for one particular user – to Hadoop’s naive use of Linux group IDs. In other words… wait for it… Hadoop is not an OS! Datascope Analytics and IDEO teamed up on Data Design for Chicago Energy – don’t miss the part about their Curry Crawl :) Also, MapR hosted a cocktail party in the penthouse that was an international who’s who of Big Data.

I look forward to being involved with Strata confs – Beau Cronin and I will be co-hosts for the Hadoop and Beyond track at Strata SC in Feb. Flo Leibert and I will be teaching a 3-hour Mesos tutorial. Secret handshakes for discount codes are in effect :) Also, Strata EU next year will be held in Barcelona. Looking forward to that!

Upstairs, Downstairs

A criticism heard at many conferences involves an “upstairs, downstairs” effect. Upstairs: speakers give interesting talks, perhaps about use cases similar to what you need, but often about obscure cases that are far afield from your needs. Downstairs: vendors push products that address the “nuts and bolts” of cluster computing, and emphasize vague nuances about how they differentiate. Not so much about use cases. People from Enterprise IT organizations walk away shaking their heads: rocket science upstairs, 150 lookalike Big Data vendors downstairs… perhaps the best conversations are to be found on the escalators in-between. Okay, we need to emphasize use cases in the conference content – but how about the content in company experiences?

For the past 4–5 years or so, we’ve been seeing this evolve. Many companies scramble to adopt a Big Data practice based on Hadoop – their “journey”, to co-opt the marketing lingo du jour – without intended use cases. One might call this Hadoop-as-a-Goal. WSJ recently ran an excellent article by Scott Adams, creator of Dilbert, which one can paraphrase as “Goals are for losers.” Adams points out that establishing “goals” is a fast way to set yourself up for failure, while cultivating a “system” is a recipe for success. I couldn’t agree more. It cuts to the heart of the problem about Hadoop-as-a-Goal.

Let me ask: Do you have a system in place to leverage your data at scale? Or do you have a strategy based on what the vendors are providing, punctuated with a goal of converting those tools into a practice?

Stated another way, linear thinkers tend to set goals and flourish during a boom, when markets are steady and heading up. In contrast, I have a hunch that we are in the midst of experiencing years of significant disruptions, per market sector. Much of the disruption is fueled by leveraging data at scale. Non-linear thinkers tend to flourish in those conditions. Leading firms, e.g., Google and Amazon, have consistently flourished during down markets, thriving with approaches (systems) which seemed counter-intuitive to prevailing wisdom (goals).

I get to meet lots of people asking for advice about Big Data, and get to compare notes within lots of different organizations. One observation holds: those who prefer to talk about their favored tools… generally don’t get far in this game; while those who talk about their use cases (over tooling) are generally the ones succeeding. That observation dovetails with the criticisms about conferences. Focus on having a system, not a set of goals.

Intro to Machine Learning

At the recent Big Data TechCon I did a short course called “Intro to Machine Learning”. Many thanks for the feedback and thoughtful comments. We’re expanding this into a full-day ML workshop through Global Data Geeks – first in Austin on Jan 10, followed soon after in Seattle on Jan 27.

Part of the content for this new ML workshop focuses on history. The origins of Machine Learning date back to the 1920s with the advent of first-order cybernetics, and we follow the emerging themes into contemporary times. A few kind souls came up after the talk, mentioning how that history stuff seemed like a waste of time at first, but then became quite interesting. It really helps to establish context and to see a bigger picture for an arguably complex topic.

Part of the content focuses on business use cases and team process. There are plenty of people doing excellent Machine Learning MOOCs: Andrew Ng, Pedro Domingos, et al. I recommend those. However, this new ML workshop attempts to complement what those MOOCs tend to miss: how to put the algorithms to work in a business context. Have a system, not a set of goals.

Monoids, Building Blocks, and Exelixi

Sometimes, other authors beat me to the punch by articulating what I’ve been struggling to put into words. Sometimes they do a fantastic job of that. One recent case is the highly recommended article The Log: What every software engineer should know about real-time data’s unifying abstraction by Jay Kreps on the LinkedIn Engineering blog. I’ll call attention to the section titled “Part Four: System Building”, about building blocks for distributed systems. Jay and I perhaps disagree about Java as a priority, but I’ll bank on his words there in just about every other aspect. Jeff Dean @Google gave an excellent talk at ACM recently in a related theme, called Taming Latency Variability and Scaling Deep Learning. Also very highly recommended!

The building blocks have emerged. Once upon a time, there was an exceptionally popular OS called System/360, and business ran on that. IBM pwned the world, so to speak. Eventually, Unix emerged as an extremely powerful contender – and why? Because Unix provided building blocks. I’ll contend that if the statement “Hadoop is an OS” has any basis in reality, then it’s more analogous to System/360 – at least for now. However, the “Unix phenomenon” equivalent for distributed systems is emerging now, and that will likely be a matter of open source building blocks, not a monolithic platform.

I’ll be focusing on this “Building Blocks” theme in upcoming meetup talks, workshops, interviews with notable people in the field – plus at Data Day Texas on Jan 11, in an O’Reilly webcast on Jan 24 about running Spark on Mesos, and at my Strata SC talk about Mesos as an SDK for building distributed frameworks. See you there.

Another recent case of an excellent author beating me to the punch is the paper Monoidify! Monoids as a Design Principle for Efficient MapReduce Algorithms by Jimmy Lin at U Maryland and @Twitter. Highly recommended!

This paper provides the punchline for MapReduce. One of the odd aspects of teaching MapReduce to large numbers of people is to watch their reactions to the canonical WordCount example … Most people think of counting “words” in SQL terms – in other words, using GROUP BY and COUNT() to solve the problem quite simply. In that context the MapReduce example at first looks totally bassackwards. Even so, the SQL approach doesn’t scale well, while the MapReduce approach does. What the MapReduce approach fails to clarify is that it represents a gross oversimplification of something which is truly elegant and powerful: a barrier pattern applied to using monoids. Once a person begins to understand how all the WordCount.emit(token, 1) quackery of a MapReduce example is really just a good use of monoids, and that the “map” and “reduce” phases are examples of barriers, then this whole business of parallel processing on a cluster begins to click!

To paraphrase Jimmy, “Okay, what have we done to make this particular algorithm work? The answer is that we’ve created a monoid out of the intermediate value!” Truer words were seldom spoken.
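To make that concrete, here is a minimal Python sketch of WordCount seen as a monoid. It is not code from Jimmy’s paper or from any MapReduce framework; the names `map_partition`, `combine`, and `IDENTITY` are made up for illustration. The point is that the intermediate value (a `Counter`) has an associative merge and an identity element, so partial results can be combined in any grouping, on any node. The second half applies the same trick to a mean by carrying a `(sum, count)` pair instead of the mean itself.

```python
from collections import Counter
from functools import reduce

def map_partition(lines):
    """'Map' side: reduce one partition of text to a partial word count."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

def combine(a, b):
    """Monoid operation: an associative merge of two partial counts."""
    return a + b

# Identity element: merging with an empty Counter changes nothing.
IDENTITY = Counter()

# Hypothetical partitions, as if spread across three workers (one is empty).
partitions = [
    ["the quick brown fox", "the lazy dog"],
    ["the fox jumps"],
    [],
]

partials = [map_partition(p) for p in partitions]
total = reduce(combine, partials, IDENTITY)

# A mean is not associative on its own, but a (sum, count) pair is a monoid.
def mean_combine(x, y):
    return (x[0] + y[0], x[1] + y[1])

MEAN_IDENTITY = (0.0, 0)
partial_sums = [(10.0, 2), (5.0, 1), MEAN_IDENTITY]
s, n = reduce(mean_combine, partial_sums, MEAN_IDENTITY)
mean = s / n
```

Because `combine` is associative, a cluster is free to merge partials pairwise in whatever order they arrive; the grouping does not change the answer.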

Putting on my O’Reilly author hat, I’ve really wanted to show a simple example of programming with Apache Mesos. Python seemed like the best context, since there are lots of PyData use cases but there aren’t a lot of distributed framework SDKs yet for Python – though, keep an eye on Blaze…

So, I wrote up a Mesos tutorial about this recently as a GitHub project, partly for Strata content, and partly for industry use cases. The project is called Exelixi, named after the Greek word for “progress” or “evolution”. By default, Exelixi runs genetic algorithms at scale, atop a Mesos cluster. In the examples, we show Exelixi launching atop an Elastic Mesos cluster on Amazon AWS.

Even so, Exelixi is not tied to genetic algorithms or genetic programs. It can run distributed workflows on a Mesos cluster, by substituting one Python class at the command line. The distributed framework implements a barrier pattern which gets orchestrated across the cluster using REST endpoints. Consequently, it is capable of performing MapReduce plus a much larger class of distributed computing that requires a bit of messaging – which Hadoop and Spark don’t handle well. Python provides quite a rich set of packages for scientific computing, so I’m hoping this new open source project gets some use at scale. Moreover, it provides an instructional tool: showing how to write a distributed framework in roughly 300 lines of Python. You’ll even find a monoid used as a way to optimize GAs and GPs at scale. I could go on more about use of gevent coroutines for high-performance synchronization, about the use of a hash ring, how Exelixi leverages SHA-3 digests and a Trie data structure – but I’ll save that for some upcoming meetup talks.
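For readers who haven’t met a barrier pattern before, here is a rough single-process sketch using only the Python standard library. To be clear, this is not Exelixi’s code: Exelixi coordinates workers across a cluster over REST endpoints, whereas this toy uses threads and `threading.Barrier`, and the names `run_phases` and `worker` are invented for the example. The idea it illustrates is the same, though: no worker starts the next phase until every worker has finished the current one.

```python
import threading
from collections import Counter

def run_phases(shards):
    """Run a two-phase job with one thread per shard, separated by a barrier."""
    n = len(shards)
    barrier = threading.Barrier(n)
    partials = [None] * n   # phase 1 output, one slot per worker
    results = [None] * n    # phase 2 output, one slot per worker

    def worker(i, shard):
        # Phase 1: purely local work on this worker's shard.
        partials[i] = Counter(shard)
        # Barrier: block until all n workers have written their partials.
        barrier.wait()
        # Phase 2: now it is safe to read everyone else's phase 1 output.
        results[i] = sum(partials, Counter())

    threads = [
        threading.Thread(target=worker, args=(i, shard))
        for i, shard in enumerate(shards)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

# Hypothetical shards of tokens, one per worker.
results = run_phases([["a", "b"], ["a"], ["c", "a"]])
```

Every worker ends up with the same merged count, since each one waits at the barrier before reading the shared partials. Swap the barrier for an HTTP call to a coordinator and the threads for processes on different machines, and you have the general shape of the pattern.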

The implications of building blocks for distributed systems? Not unlike the notion of interchangeable parts – and gosh, that was a game-changer for industry, speaking of “factory patterns”. Brace yourselves for much the same as we “retool” the industrial plant across all sectors to leverage several orders of magnitude higher data rates.

There are further implications… If you look at how Twitter OSS has retooled its cluster computing based on open source projects such as Mesos and Summingbird – again, with lots of those monoids wandering about – an interesting hypothesis emerges. Note that much of the “heavy lifting” in ML boils down to the cost of running lots and lots of optimization via stochastic gradient descent. Google, LinkedIn, Facebook, and others fit that description. Looking a few more years ahead, one of the promises of quantum computing is to be able to knock down huge gradient descent problems in constant time. Engineering focus on leveraging monoids would certainly pay out a high ROI in that scenario.

Minecraft

Ah, but quantum computing … how far off is that? It’s still a matter of research, true. However, one might take a hint from a recent twist… The Google Quantum AI team released a Minecraft mod called qCraft, with the intent of identifying non-linear thinkers who are adept at manipulating quantum problems. Identifying them early. Those of you who have kiddos probably understand that 10-year-olds play Minecraft. So let’s do the math … some subset of these avid Minecraft players will become Google AI interns within less than a decade.

Talk about “Have a system”, indeed.

I’ll take a bet that the event horizon is more like 5 years. Which means that 5+ years from now, I’ll be hiring for engineers and scientists who’ve logged 5+ years of experience with graph databases, monoids, abstract algebra, sparse matrices, datacenter computing, probabilistic programming, etc. And Minecraft :) I will be passing on engineers who’ve spent the past 5+ years rolling up log files in Apache Pig. #justsaying

While we’re on the topic, check out my friend Mansour’s plugin on GitHub for Minecraft. The plugin visualizes the results of analyzing large-scale data using machine learning and Minecraft :) This turns the process into a kind of game. Hint: Mansour and I both have kiddos in that age range.

Events

I’ve been working with Global Data Geeks to interview notable people in the field. Most recently, we had excellent discussions with Davin Potts, an expert in computer vision and Python-based supercomputing, and also with Michael Berthold, the lead on KNIME. On deck, we’ll have another interview coming soon with Brad Martin, an expert in commodity hardware for extremely secure cryptographic work for consumer use.

Other events coming up:

- Intro to Machine Learning workshop, Austin, Jan 10

- the amazing Data Day Texas 2014 conf, Austin, Jan 11

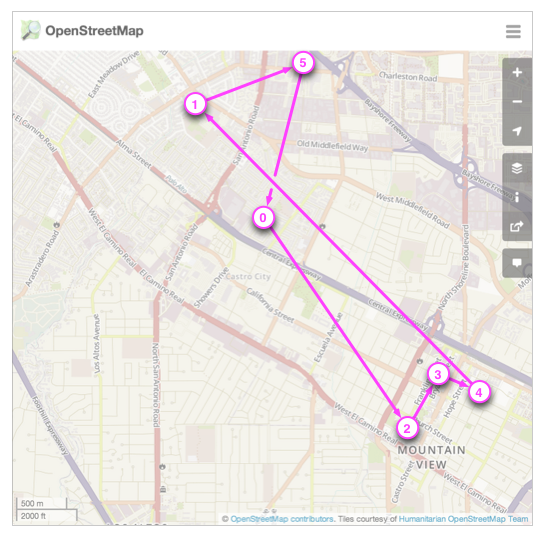

- KNIME training course, Mountain View, Jan 14

- free O’Reilly webcast: Spark on Mesos, Jan 24

- Intro to Machine Learning workshop, Seattle, Jan 27

- Py Data Science: Exelixi, Mesos, and Genetic Algorithms, Jan 28

- Strata SC conf, Santa Clara, Feb 11–13

- Big Analog Data™ Solutions Summit Austin, Apr 8

Also keep a watch for updates about a Twitter OSS conf coming up this Spring.

On that note, I am available for consulting. Also for weddings and parties. And I wish you and yours very Happy Holidays. See you in the New Year!