2012-06-22

2010-08-05

sample src + data for getting started on hadoop

Monday, 19 July 2010... I had a wonderful opportunity to present at the Silicon Valley Cloud Computing meetup, on the topic "Getting Started on Hadoop".

The talk showed examples of Hadoop Streaming, based on Python scripts running on the AWS Elastic MapReduce service.

We started with a brief history of MapReduce, including the concepts leading up to the framework as well as open source projects and services which have followed. Then we stepped through the ubiquitous “WordCount” example (a “Hello World” for MapReduce), showing how Python and Hadoop Streaming make it simple to iterate and debug from a command line using Unix/Linux pipes.
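For readers who have not seen the pattern, here is a minimal sketch of a streaming-style WordCount. It is not the code from the talk; the function names and the tokenization rule are assumptions for illustration. The mapper emits tab-separated `word<TAB>1` records, and the reducer sums counts over runs of identical keys, relying on the sorted order that Hadoop Streaming (or a local `sort`) provides between the two steps.

```python
import re
from itertools import groupby


def mapper(lines):
    """Emit one 'word<TAB>1' record per token, lowercased."""
    for line in lines:
        for word in re.findall(r"[a-z0-9']+", line.lower()):
            yield f"{word}\t1"


def reducer(sorted_records):
    """Sum counts for runs of identical keys; input must be sorted by key."""
    pairs = (record.split("\t", 1) for record in sorted_records)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"


def run_local(lines):
    """Simulate 'mapper | sort | reducer' inside one process for debugging."""
    return list(reducer(sorted(mapper(lines))))
```

In a real streaming job the mapper and reducer would live in separate scripts that read standard input and write standard output, which is what makes it possible to test them from a shell with ordinary pipes before submitting anything to a cluster.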

Source code is available on GitHub and, oddly enough, the slide deck got an editor's pick that week on SlideShare.

The focus of the talk was to show text mining of the infamous Enron Email Dataset, which Infochimps.com and CMU make available. In that context, the example code creates an inverted index of keywords found in the email dataset, begins to build a semantic lexicon of "neighbor" keyword relationships, and adds some data visualization and social graph analysis using R and Gephi.
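As a rough illustration of what an inverted index is, the sketch below maps each keyword to the messages that contain it. This is a single-process stand-in, not the talk's MapReduce code; the message ids, the punctuation rule, and the stopword set are all hypothetical. In a MapReduce version the mapper would emit (keyword, message id) pairs and the reducer would collect the ids per keyword.

```python
from collections import defaultdict


def build_inverted_index(docs, stopwords=frozenset()):
    """Map each keyword to the sorted list of document ids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            # Strip surrounding punctuation; a real tokenizer would do more.
            word = token.strip(".,;:!?\"'()")
            if word and word not in stopwords:
                index[word].add(doc_id)
    return {word: sorted(ids) for word, ids in index.items()}
```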

Along with my presentation, Matthew Schumpert from Datameer gave a demo of their product, doing some similar kinds of text analysis.

Lots of people showed up, enough that the kind folks at Fenwick & West LLP grew concerned about running out of seats :) The audience asked several excellent questions and we had a lot of discussion after the talk. Todd Hoff wrote an article summarizing the talk and discussions, along with some great perspectives on High Scalability.

Admittedly, the Enron aspects of the talk were intended as somewhat of a teaser; my examples focused more on method than on results. I'd talked with several people who'd never seen how to write Python scripts for Hadoop Streaming, how to run Hadoop jobs on Elastic MapReduce, how to calculate some basic text analytics or produce simple data visualizations. Even so, if you want to investigate the Enron data yourself, then check out the code, download the data, and run this on AWS. There were some fun surprises to be found among the analytics results, which may be good to publish as a follow-up talk.

Many thanks to SVCC and our organizer Sebastian Stadil, our venue host Fenwick & West LLP, and all who participated.

Posted by Paco Nathan at 08:09, 0 comments

Tags: algorithms, aws, code, hadoop

2009-06-03

upcoming talks

I will be speaking at three events over the next two weeks. These talks will focus on how ShareThis leverages AWS and MapReduce, based on Cascading and AsterData as vendors, plus several open source projects including Bixo and Katta.

Tue 2009-06-09, 7:00pm-9:00pm

ScaleCamp

http://scalecamp.eventbrite.com/

Santa Clara Marriott

2700 Mission College Blvd

Santa Clara, CA 95054

Will discuss "How ShareThis mashes technologies in the cloud for Big Data analysis, leveraging AsterData nCluster, Cascading, Amazon Elastic MapReduce, and other AWS services in our system architecture."

This will be run in a Bar Camp format, along with DJ Patil, Ted Dunning, and other great speakers.

Wed 2009-06-10, 8:30am-8:30pm

Hadoop Summit 2009

http://developer.yahoo.com/events/hadoopsummit09/

Santa Clara Marriott

2700 Mission College Blvd

Santa Clara, CA 95054

Two talks: "Cascading for Data Insights at ShareThis: Syntax is for humans, API's are for software", as a developer talk, 2-3pm. Also, participating on the Amazon AWS panel discussion moderated by @jinman, about running Hadoop on EC2 and Elastic MapReduce, 6:00-6:30pm.

Last year's Hadoop Summit was a must-not-miss event, and I got lucky on timing and was able to tip-off friends at AWS to check it out... Amazon sponsored the lunch, and even though I did have to miss that event, AWS sent me awesome t-shirt schwag which I treasure to this day :)

Tue 2009-06-16, 1:00pm-7:00pm

Amazon AWS Start-Up Project

http://aws.amazon.com/startupproject/

Plug & Play Tech Center

440 N Wolfe Rd

Sunnyvale, CA 94085

Panel discussion, along with Netflix, SmugMug, and other AWS customers, to "help business and technical decision makers from small start-ups to large enterprises learn how to be successful with Amazon Web Service."

Really looking forward to this. I was on the panel for AWS Start-Up Tour in Santa Monica in 2007. You may recall there were some fires... like, nearly all of LA and SD hillsides were up in flames. Even so, 250+ people braved their way through the smoke and ash to reach that night club on Santa Monica Blvd, and we had a great panel discussion. This year in Sunnyvale should be even better.

While I've got your attention... I've been spending a fair amount of time in Seattle and on the phone to Seattle, particularly at one reconditioned former medical center, and especially regarding the fine folks at Elastic MapReduce. While in Seattle, I got to hang out with 30+ other system architects, many incredible folks. M David, of course I'm talking about you! :) At this stage of my career, I rarely seem to get time to sit back with peers, and swap stories and ideas. Time well spent.

BTW, we've been working with EMR now for a while, and recently started using EMR in production. One of those cases where I could not post a blog entry then, but definitely wanted to tell everybody! Now that the news has been long public, I'm actually too busy using it commercially to blog ;)

See you on twitter @pacoid

2009-02-10

westward ho

Some friends of mine live in the Westport area of Kansas City, across the street from a marker which notes the Oregon Trail, the Santa Fe Trail, and the California Trail. For many people in North America during the 19th century, their resolve to migrate west was tested there.

So it is for us, with two little girls ready to travel. Our oldest is eager to live in hotels again, because they promise TVs, room service, and jacuzzi pools. She was done with KC as soon as she got her fill of fresh snow. Our youngest has been missing the ocean, especially the seals. She's asked, "When do we go home?" just about ever since we arrived in KC.

A woman from Kansas a few days ago made a few rather hostile remarks about how Californians feel about seals -- right after my little girl got through declaring how much she loves seals and whales.

Unforgivably callous. Intolerably ignorant. Not entirely unexpected. In other words, par for the course. I'm sorry to have to feel that kind of resignation, but it is entirely realistic. I bit my tongue to avoid making an offhand retort about "fly over zone". After all, parts of the Midwest are highly recommended.

Two bright spots have made these past 10 months worthwhile...

One is my team in the Analytics department at Adknowledge -- a.k.a., "Team Mendota". Those people have been a joy and an honor to work among. In addition to several contributions to R, Hadoop, AWS, RightScale, and points in between, we've made big strides in methods for having statisticians and developers working side-by-side on "Big Data" projects -- with tangible results. Frankly, I would have left much sooner (supra 3 yr old tugging daddy's heartstrings) if it had not been for how much I appreciate this team. Flo, Chris, Nathan, Chun, Bill, Shan, Vicky, Margi, David, Gaurav -- I wish you all very well. My regret is that I did not get to work with you long enough.

The other is our collective friends and families in the area, met through our kids -- mostly because of their school, Global Montessori Academy. My wife has served on the board there. The teachers and just everyone involved have been amazing. Not sure quite how that place emerged here inside of KC, and I'd be hard-pressed to believe that anything much like it exists between Chicago and Austin... but GMA and the people associated will be missed.

I found myself thinking, many many times while living here in KC, "How can I teach my daughters about the principles in which I believe, if I cannot live them here?" GMA actually does represent those principles. Highly recommended.

For everyone else, see you back on the Left Coast!

2008-09-27

cloud computing - methodology notes

A couple companies ago, one of my mentors -- Jack Olson, author of Data Quality -- taught us team leaders to follow a formula for sizing software development groups. Of course this is simply guidance, but it makes sense:

9:3:1 for dev/test/doc

In other words, a 3:1 ratio of developers to testers, and then a 9:1 ratio of developers to technical writers. Also figure in how a group that size (13) needs a manager/architect and some project management.
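The arithmetic is simple enough to check by hand, but a small sketch makes the pairwise ratios and the group size explicit. The helper names and the role labels are mine, chosen only for illustration.

```python
from fractions import Fraction

ROLES = ("dev", "test", "doc")


def headcount(ratio, multiplier=1):
    """Individual contributors implied by repeating a role ratio."""
    return sum(ratio) * multiplier


def role_ratio(ratio, a, b, roles=ROLES):
    """Ratio of role a to role b, e.g. developers per tester."""
    counts = dict(zip(roles, ratio))
    return Fraction(counts[a], counts[b])
```

With the ratio `(9, 3, 1)`, `headcount` gives 13, and `role_ratio` gives 3 developers per tester and 9 developers per technical writer.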

On the data quality side, Jack sent me out to talk with VPs at major firms -- people responsible for handling terabytes of customer data -- and showed me just how much the world does *not* rely on relational approaches for most data management.

Jack also put me on a plane to Russia, and put me in contact with a couple of firms -- in SPb and Moscow, respectively. I've gained close friends in Russia, as a result, and profound respect for the value of including Russian programmers on my teams.

A few years and a couple of companies later, I'm still building and leading software development teams, I'm still working with developers from Russia, I'm still staring at terabytes (moving up to petabytes), and I'm still learning about data quality issues.

One thing has changed, however. During the years in-between, Google demonstrated the value of building fault-tolerant, parallel processing frameworks atop commodity hardware -- e.g., MapReduce. Moreover, Hadoop has provided MapReduce capabilities as open source. Meanwhile, Amazon and now some other "cloud" vendors have provided cost-effective means for running thousands of cores and managing petabytes -- without having to sell one's soul to either IBM or Oracle. But I digress.

I started working with Amazon EC2 in August, 2006 -- fairly early in the scheme of things. A friend of mine had taken a job working with Amazon to document something new and strange, back in 2004. He'd asked me terribly odd questions, ostensibly seeking advice about potential use cases. When it came time to sign up, I jumped at the chance.

Over the past 2+ years I've learned a few tricks and have an update for Jack's tried-and-true ratios. Perhaps these ideas are old hat -- or merely scratching the surface or even out of whack -- at places which have been working with very large data sets and MapReduce techniques for years now. If so, my apologies in advance for thinking aloud. This just seems to capture a few truisms about engineering Big Data.

First off, let's talk about requirements. One thing you'll find with Big Data is that statistics govern the constraints of just about any given situation. For example, I've read articles by Googlers who describe using MapReduce to find simple stats to describe a *large* sample, and then build their software to satisfy those constraints. That's an example of statistics providing requirements. Especially if your sample is based on, say, 99% market share :)

In my department's practice, we have literally one row of cubes for our Statisticians, and across the department's center table there is another row of cubes for our Developers. We build recommendation systems and other kinds of predictive models at scale; when it comes to defining or refining algorithms that handle terabytes, we find that analytics make programming at scale possible. We follow a practice of having statisticians pull samples, visualize the data, run analysis on samples, develop models, find model parameters, etc., and then test their models on larger samples. Once we have selected good models and the parameters for them, those are handed over to the developers -- and to the business stakeholders and decision makers. Those models + parameters + plots become our requirements, and part of the basis for our acceptance tests.
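One way to picture statistics acting as an acceptance test is sketched below: reference statistics come from a small sample, and a large run passes only if its observed mean stays within a tolerance of them. This is a toy illustration rather than our actual process; the function names and the threshold `k` are assumptions, and real models involve far more than a mean and a standard deviation.

```python
import statistics


def summarize(sample):
    """Reference statistics computed by analysts on a small sample."""
    return {"mean": statistics.mean(sample), "stdev": statistics.stdev(sample)}


def within_tolerance(reference, observed_mean, k=3.0):
    """Acceptance check: is the large-run mean within k stdevs of the sample mean?"""
    return abs(observed_mean - reference["mean"]) <= k * reference["stdev"]
```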

Given the sheer lack of cost-effective tools for running statistics packages at scale (SAS is not an option, R can't handle it yet) then we must work on analytics through samples. That may be changing, soon. But I digress.

Developers working at scale are a different commodity. My current employer has a habit of hiring mostly LAMP developers, people who've demonstrated proficiency with PHP and SQL. That's great for web apps, but hand the same people terabytes and they'll become lost. Or make a disastrous mess. I give a short quiz during every developer interview to ensure that our candidates understand how a DHT works, how to recognize a good situation for using MapReduce, and whether they've got any sense for working with open source projects. Are they even in the ballpark? Most candidates fail.

I certainly don't look for one-size-fits-all, either: some people are great working on system programming, which is crucial for Big Data, and others are more suited for working on algorithms. The latter is problematic, because algorithms at scale do not tend to work according to how logic might dictate. Precious little at scale works according to how logic might dictate. In fact, if I get a sense that a developer or candidate is too "left brained" and reliant on "logic", then I know already they'll be among the first to fail horribly with MapReduce. One cannot *think* their way through failure cases within a thousand cores running on terabytes. Data quality issues alone should be sufficient, but there are deeper forces involved. Anywho, one must *measure* their way through troubleshooting at scale -- which is another reason why statistics and statisticians need to be close at hand.

The trouble is that most commodity hardware is built to perform well within given sets of tolerance. If you restart your laptop a hundred times, it will probably work fine; however if you launch 100 virtual servers, some percentage will fail. Even among the ones that appear to run fine, there will be disk failures, network failures, memory glitches, etc., some of which do not become apparent until they've occurred many times over. So when you pump terabytes through a cluster of servers for hours and hours, some parts of your data are going to get mangled.
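A back-of-the-envelope sketch shows why failures that are rare on one machine become routine on a cluster. It assumes failures are independent and equally likely on every server, which is a simplification, and the 1% figure in the usage note is a made-up number rather than a measurement.

```python
def prob_any_failure(p_single, n_servers):
    """Probability that at least one of n independent servers fails."""
    return 1.0 - (1.0 - p_single) ** n_servers


def expected_failures(p_single, n_servers):
    """Expected number of failed servers under the same assumptions."""
    return p_single * n_servers
```

With a hypothetical 1% chance of trouble per server, a single launch is almost always fine, yet across 100 servers the chance of at least one failure is roughly 63%, and the expected number of failures is one.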

Which brings up another problem: unit tests run at scale don't provide a whole lot of meaning. Sure, it's great to have unit tests to validate that your latest modification and check-ins pass tests with small samples of data. Great. Even so, minor changes in code, even code that passes its unit test, can cascade into massive problems at scale. Testing at scale is problematic, because sometimes you simply cannot tell what went wrong unless you go chasing down several hundred different log files, each of which is many GB. Even if results get produced, with Big Data you may not know much about the performance of results until they've had time to go through A/B testing. Depending on your operation, that may take days to evaluate correctly.

One trick is to have statisticians acting in what would otherwise be a "QA" role -- poking and prodding the results of large MapReduce jobs.

One other trick is to find the people talking up the use of scrum, XP, etc., and relocate those people to another continent. I use iterative methodology -- from a jumble of different sources, recombined for every new organization. However, when someone wants to sell me an "agile" product where unit tests adjudicate the project management, I show that person the door. Don't let it hit you on the way out. In terms of working with cloud-based architecture and Big Data, something new needs to be invented for methodology, something beyond "agile". I'll try to document what we've found to work, but I don't have answers beyond that.

Some key points:

Each statistician can prompt enough work for about three developers; it's a two-way street, because developers are generally helping analysts resolve system issues, pull or clean up data that's problematic, etc.

Statisticians work better in pairs or groups, not so well individually. In fact, I round up all the quantitative analysts in the company once each week for a "Secret Statisticians Meeting". We run the meeting like a grad seminar. Management and developers are not allowed, unless they have at least a degree in mathematics -- and even then they must prove their credentials by presenting and submitting to a review.

In any case, I try to assign a minimum of two statisticians to any one project, to get overlap of backgrounds and shared insights.

We use R; it's great for plots and visualizing data. I find that hiring statisticians who have the chops to produce good graphics and dashboards -- that's crucial. Stakeholders don't grasp an equation for how to derive variance, but they do grasp seeing their business realities depicted in a control chart or scatterplot. That communication is invaluable, on all sides. Hire statisticians who know how to leverage R to produce great graphics.

Back to the developers, some are vital as systems programmers -- for scripting, troubleshooting, measuring, tweaking, etc. We use Python mostly for that. You won't be able to manage cloud resources without that talent. Another issue is that your traditional roles for system administrators, DBAs, etc., are not going to help much when it comes to troubleshooting an algorithm running in parallel on a thousand cores. They will tend to make remarks like "Why don't you just reboot it?" As if. So you're going to have to rely on your own developers to run operations. Those are your system programmers.

Other developers work at the application layer. Probably not the same people, but you might get lucky. Don't count on it. Algorithm work requires that a programmer can speak statistics, and there aren't many people who cross both worlds *and* write production quality code for Hadoop.

Another problem is documentation. Finding a tech writer to document a team working on Big Data would entail finding an empath. Besides, the statisticians generate a lot of documents, and the developers (and their automated tools) are great at generating tons of text too. Precious little of it dovetails, however. Of course we use a wiki to capture our documentation -- it has versioning, collaborative features, etc. -- and we also use internal blogs for "staging" our documentation, writing drafts, logging notes, etc. Even with all those nifty collab tools, it still takes an editor to keep a bunch of different authors and their texts on track -- *not* a tech writer, but an actual editor. Find one. They tend to be inexpensive compared with developers, and hence more valuable than anybody but the recruiters. But I digress.

Back to those team ratios from Jack Olson... I have a hunch that the following works better in Big Data:

2:3:3:1 for stats/sys/app/edit

A team of 9 needs a team leader/manager -- which, as I understand, might be called a "TL/M" at GOOG, a "2PT" at AMZN, etc. I favor having that person be hands-on, and deeply involved with integration work. Integration gives one a bird's-eye view of which individual contributors are ahead or behind. Granted, that'd be heresy at most large firms which consider themselves adept at software engineering. Even so I find it to be a trend among successful small firms.

One other point... Working with Big Data, and especially in the case of working with cloud computing... the biggest risk involved and the most complex part of the architecture involves data loading. In most enterprise operations, data loading is taken quite seriously. If you're running dozens or hundreds (or thousands?) of servers in a Hadoop cluster, then take a tip from the large shops and get serious about how you manage your data. Have a data architect -- quite possibly, the aforementioned team leader/manager.

YMMV.

Posted by Paco Nathan at 22:09, 1 comment

2008-08-26

hadoop in the cloud - patterns for automation

A very good use for Hadoop is to manage large "batch" jobs, such as log file analysis. A very good use for elastic server resources -- such as Amazon EC2, GoGrid, AppNexus, Flexiscale, etc. -- is to handle large resource needs which are periodic but not necessarily 24/7 requirements, such as large batch jobs.

Running a framework like Hadoop on elastic server resources seems natural. Fire up a few dozen servers, crunch the data, bring down the (relatively smaller) results, and you're done!

What also seems natural is the need to have important batch jobs automated. However, the mechanics of launching a Hadoop cluster on remote resources, monitoring for job completion, downloading results, etc., are far from being automated. At least, not from what we've been able to find in our research.

Corporate security policies come into play. For example, after a Hadoop cluster has run for several hours to obtain results, your JobTracker out on EC2 may not be able to initiate a download back to your corporate data center. Policy restrictions may require that to be initiated from the other side.

There may be several steps involved in running a large Hadoop job. Having a VPN running between the corporate data center and the remote resources would be ideal, and allow for simple file transfers. Great, if you can swing it.

Another problem is time: part of the cost-effectiveness of this approach is to run the elastic resources only as long as you need them -- in other words, while the MR jobs are running. That may make the security policies and server automation difficult to manage.

One approach would be to use message queues on either side: in the data center, or in the cloud. The scripts which launch Hadoop could then synchronize with processes on your premises via queues. A queue poller on the corporate data center side could initiate data transfers, for example.
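To make the idea concrete, here is a sketch of that handshake. Python's in-memory `queue.Queue` stands in for a real message queue service, and the message fields, function names, and result path are all hypothetical. The point is the direction of control: the cloud side only announces completion, and the data center side initiates the transfer.

```python
import queue


def announce_completion(outbox, job_id, result_uri):
    """Cloud side: publish a 'job finished' message; never pushes data inward."""
    outbox.put({"job_id": job_id, "status": "done", "result_uri": result_uri})


def poll_once(inbox, fetch):
    """Data center side: drain pending messages and pull each finished result.

    `fetch` is a caller-supplied function that performs the transfer, so the
    download is always initiated from inside the corporate network.
    """
    fetched = []
    while True:
        try:
            msg = inbox.get_nowait()
        except queue.Empty:
            break
        if msg.get("status") == "done":
            fetch(msg["result_uri"])
            fetched.append(msg["job_id"])
    return fetched
```

A scheduler on the corporate side could call `poll_once` every few minutes; once the results are safely downloaded, the same process could request that the remote cluster be shut down so that it stops accruing charges.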

Would be very interested to hear how others are approaching this issue.

Posted by Paco Nathan at 16:29, 0 comments

2008-07-08

a methodology for cloud computing architecture - part 5

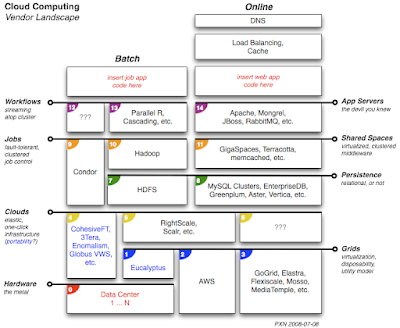

Diagram, redux.

Errata: fixed issues regarding 3Tera and CohesiveFT, both of which deserved better positioning in the stack than my cloudy thoughts had heretofore imagined.

Again, many thanks to vendors who are contacting me, and kudos in general to discussions on the "cloud-computing" group.

One thing jumps out from this diagram now: items 6 and 12 are placeholders. What vendors or projects might emerge to cover them? For example, might some enterprising soul rewrite portions of the Scalr project so that it can handle APIs for other vendors?

Posted by Paco Nathan at 17:22, 0 comments

2008-07-07

a methodology for cloud computing architecture - part 4

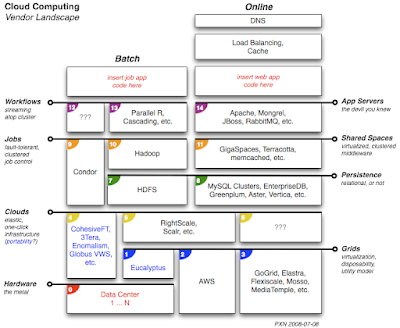

[Ed: the diagram shown below has been updated; see previous diagram and the discussion update.]

The diagram won't go away -

This time around, I'd like to take a few notes from both the Globus VWS and the GoGrid / Flexiscale discussions on the "cloud-computing" email list...

Suppose you just happened to have a bunch of hardware, and wanted to leverage that as a grid – in other words, as "disposable infrastructure" to use the phrasing from 3Tera, or perhaps "managed virtualization". From what I've read in the respective web sites, there are a few ways to approach that. 3Tera has AppLogic, and also there is the Eucalyptus project which exposes a layer looking like EC2.

At a bit higher level of abstraction, there are offerings from CohesiveFT and Enomalism which provide similar "grid" atop your metal, but also manage your images for your cloud-based apps on EC2. In other words, those allow for portability of cloud-based apps – whether on your own metal or on metal leased from AWS.

Slicing this stack diagonally in another direction, there are RightScale and Scalr which manage scaling your cloud-based app on EC2 – and monitor it. The former in particular provides excellent features for scripting and monitoring apps. Flexiscale provides similar features for apps run on their cloud (in UK – hope I'm getting those facts straight).

Traveling up the stack on the batch side, Globus provides open source software to run jobs on your own metal, or on EC2 – or on scientific grids, if you qualify.

Meanwhile, GigaSpaces has announced joint projects with both RightScale (for EC2) and CohesiveFT (for your own metal + EC2) to run spaces for SBA – in other words, virtualized middleware. That appears to take the integration of cloud portability and cloud management to another level.

The diagram still needs work along the Hadoop / HDFS portion of the stack – which was its original intention. Obviously there is no distributed file system when talking about Globus, Condor, etc.

Overall, it's quite nice to see this evolution among vendors and product offerings. Many thanks to the vendors who've been taking me on guided tours of their wares.

Posted by Paco Nathan at 20:25, 2 comments

2008-07-05

a methodology for cloud computing architecture - part 3

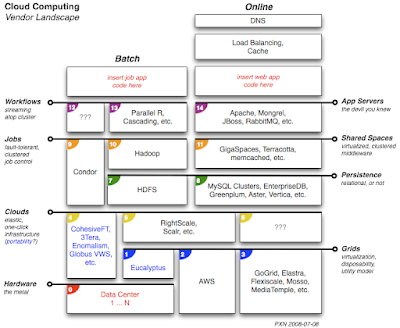

[Ed: the diagram shown below has been updated; see previous diagram and the discussion update.]

One more iteration on that diagram.

This time we'll represent some structured approaches running atop a Hadoop cluster. Parallel R being one that addresses our department's needs the most directly...

FWIW, I'm more interested in what can be accomplished with batch jobs and non-relational database approaches -- on Linux-based commodity hardware. Probably an occupational hazard for working with very large data sets and fast turn-around time requirements, where fault-tolerance seems to be what cripples very large-scale relational approaches, in practice. In other words, if your solution to handling very large data requires an SQL query, I might not take the call. At least not for analytics.

Having said that, we still have a ton of transaction / web-based front-end work, which was written for LAMP and still fits relational better.

Posted by Paco Nathan at 20:06, 0 comments

2008-06-30

a methodology for cloud computing architecture - part 2

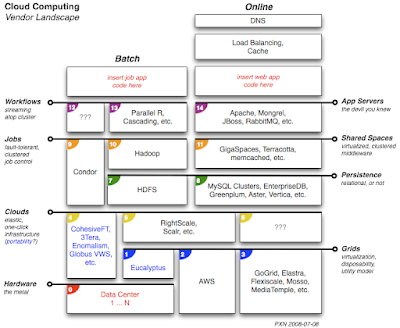

[Ed: the diagram shown below has been updated; see previous diagram and the discussion update.]

Thanks to many people who gave their feedback – publicly and privately – about the diagram in my previous post. Apologies to RightScale and others for inverting their placement. I definitely want to recommend John Willis for an excellent overview of the vendors in this space.

Will keep attempting to articulate this until we get it right :)

- I really wanted to avoid virtualization. However, it looks like that's a foregone conclusion.

- I prefer to place the cloud layer atop the grid layer. Several good resources disagree on that; the two terms flip-flop.

- Our "3x" sidebar about TCO and AWS was quite interesting. Would like to hear more from others about how they estimate that metric.

Mostly, I'm interested in software solutions which can place a "cloud manager" layer atop our own equipment – so that we could pull apps from one cloud to another depending on needs. Labeled "5" in the new diagram.

Comments are welcomed.

Posted by Paco Nathan at 21:08, 1 comment

2008-06-29

a methodology for cloud computing architecture

[Ed: the diagram shown below has been updated; see previous diagram and the discussion update.]

I got asked to join a system architecture review recently. The subject was a plan for data center redundancy. The organization had one data center, and included some relatively big iron:

- a large storage system appliance, with high capex (its name starts with an "N", let you guess)

- a large RDBMS with expensive licensing (its name starts with an "O", let you guess)

- a large data warehouse appliance, with GINORMOUS capex, expensive maintenance fees, and far too much downtime (its name includes a "Z", let you guess)

- plus several hundred Linux servers on commodity hardware running a popular virtualization product (its name includes an "M" and a "W", let you guess)

Trouble is, most of those Ginormous capexii species of products – particularly the noisome Ginormous capexii opexii subspecies – were already heavily overloaded by the organization. That makes the "50% capacity" load balancing story hard to believe. Otherwise it would have been a good approach, circa 2005. These days there are better ways to approach the problem...

After reviewing the applications it became clear that the data management needed help – probably before any data centers got replicated. One immediate issue concerned unwinding dependencies on their big iron data management, notably the large RDBMS and DW appliance. Both were abused at the application level. One should use an RDBMS as, say, a backing store for web services that require transactions (not as a log aggregator), or for reporting. One should use a DW for storing operational data which isn't quite ready to archive (not for cluster analysis).

We followed an approach: peel off single applications out of that tangled mess first. Some could run on MySQL. Others were periodic batch jobs (read: large merge sorts) which did not require complex joins, and could be refactored as Hadoop jobs more efficiently. Perhaps the more transactional parts would stay in the RDBMS. By the end of the review, we'd tossed the DW appliance, cut RDBMS load substantially, and started to view the filer appliance as a secondary storage grid.

Another approach I suggested was to think of cloud computing as the "duplicating 50% capacity" argument taken to the next level. Extending our "peel one off" approach, determine which applications could run on a cloud – without much concern about "where" the cloud ran other than the fact that we could move required data to it. Certainly most of the Hadoop jobs fit that description. So did many of the MySQL-based transactions. Those clouds could migrate to different grids, depending on time, costs, or failover emergencies.

One major cost is data loading across grids. However, the organization gains by duplicating its most critical data onto different grids and has a business requirement to do so.

In terms of grids, the organization had been using AWS for some work, but had fallen into a common trap of thinking "Us (data center) versus Them (AWS)", arguing about TCO, vendor lock-in, etc. Sysadmins calculated a ratio of approximately 3x cost at AWS – if you don't take scalability into consideration. In other words, if a server needs to run more than 8 hours per day, it starts looking cheaper to run on your own premises than to run it on EC2.
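The 8-hour figure falls out of the 3x ratio directly, as this sketch shows. It assumes the in-house server costs the same whether busy or idle, while the cloud server is billed only for the hours it actually runs; the function names are mine, and the model ignores everything else that goes into a real TCO estimate.

```python
def break_even_hours_per_day(cloud_cost_multiple, hours_in_day=24):
    """Daily hours above which an always-on in-house server is cheaper."""
    return hours_in_day / cloud_cost_multiple


def cheaper_on_premises(hours_needed_per_day, cloud_cost_multiple=3.0):
    """True when daily usage exceeds the break-even point."""
    return hours_needed_per_day > break_even_hours_per_day(cloud_cost_multiple)
```

At a 3x multiple the break-even is 24 / 3 = 8 hours per day, so a server needed 10 hours a day looks cheaper in-house, while one needed 4 hours a day looks cheaper on EC2.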

I agree with the ratio; it's strikingly similar to the 3x markup you find buying a bottle of good wine at any decent restaurant. However, scalability is a crucial matter. Scaling up as a business grows (or down, as it contracts) is the vital lesson of Internet-based system architecture. Also, the capacity to scale up failover resources rapidly in the event of an emergency (data center lands under an F4 tornado) is much more compelling than TCO.

In the diagram shown above, I try to show that AWS is a vital resource, but not the only vendor involved. Far from that, AWS provides services for accessing really valuable metal; they tend to stay away from software, when it comes to cloud computing. Plenty of other players have begun to crowd that market already.

I also try to separate application requirements into batch and online categories. Some data crunching work can be scheduled as batch jobs. Other applications require a 24/7 presence but also need to scale up or down based on demand.

What the diagram doesn't show are points where message queues fit – such as Amazon's SQS or the open source AMQP implementations. That would require more of a cross-section perspective, and, well, may come later if I get around to a 3D view. Frankly, I'd rather redo the illustration inside Transmutable, because that level of documentation for architectures belongs in real architectural tools such as 3D spaces. But I digress.

To me, there are three aspects of the landscape which have the most interesting emerging products and vendors currently:

- data management as service-in-the-cloud, particularly the non-relational databases (read: BigTable envy)

- job control as service-in-the-cloud, particularly Hadoop and Condor (read: MapReduce+GFS envy, but strikingly similar to mainframe issues)

- cloud service providing the cloud (where I'd be investing, if I could right now)

To abstract and summarize some of the methodology points we developed during this system architecture review:

- Peel off the applications individually, to detangle the appliance mess (use case analysis).

- Categorize applications as batch, online, heavy transactional, or reporting – where the first two indicate likely cloud apps.

- Think of cloud computing as a way to load balance your application demands across different grids of available resources.

- Slide the clouds across different grids depending on costs, scheduling needs, or failover capacity.

- Take the hit to replicate critical data across different grids, to have it ready for a cutover within minutes; that's less expensive than buying insurance.

- Run your own multiple data centers as internal grids, but have additional grid resources ready for handling elastic demands (which you already have, in quantity).

- Reassure your DBAs and sysadmins that their roles are not diminished due to cloud computing, and instead become more interesting – with hopefully a few major headaches resolved.

My diagram is a first pass, drafted the night after our kids refused to sleep because of a thunderstorm... subject to many corrections and at least a few revisions. The vendors shown fit only approximately, circa mid-2008. Obviously some offer overlapping services into other areas. Most vendors mentioned are linked in my public bookmark: http://del.icio.us/ceteri/grid

Comments are encouraged. Haven't seen this kind of "vendor landscape" diagram elsewhere yet. If you spot one in the wild, please let us know.

Posted by Paco Nathan at 08:22 (6 comments)

2008-03-12

hadoop, part 3: graph-based nlp

I've been evaluating graph-based methods for language analytics. At my previous employer, we used statistical parsing and non-parametric methods as a basis for semantic analysis of texts. In other words, use a web crawler to grab some text content from a web page, run it through a natural language parser (after a wee bit o' clean-up), then use the resulting parse trees to extract meaning from the text. Typical stages required for parsing include segmentation, tokenizing, tagging, chunking, and possibly some post-processing after all that. Our use case was to identify noun phrases in the text, which is called NP chunking. Baseline metrics run at about 80% for success using that kind of approach.

More recent work on graph-based methods allows for improved results with considerably faster throughput rates. We did not apply this approach at HeadCase, but my tests subsequently have shown better than an order of magnitude performance gain, in terms of CPU time, when running side-by-side tests on the same processor and data set. This approach, based on link analysis within a graph, eliminates the need for chunking. Instead it iterates on a difference equation, which tends to converge rather quickly (depending on the size of the graph).

As an example, I took input text from an encyclopedia article about Java Island found online. It has 3 paragraphs, 16 sentences, 331 words, 2053 bytes. Using a popular, open source NLP library, a parser-based approach for identifying noun phrases takes an average of 8341 milliseconds on my MacBook laptop, while the same task using the same libraries with a graph-based approach takes an average of 257 milliseconds. That's roughly a 32x speed-up.

To be fair, some researchers have used tagging followed by regular expressions to perform fairly good NP chunking. That works, quickly, but not so well. I needed better results.

The following table illustrates the success of the two approaches, compared with the top 27 key phrases selected by a human editor:

While the graph-based approach has a higher error rate, in terms of recognizing actual noun phrases, it results in comparable F-measure precision rates – with single-digit relative error between the two approaches. That is accounted for by the fact that each step in a stochastic parser introduces some error and ambiguity because of variants, but a relatively simple use of dynamic programming can be applied to prune variants and reduce error overall. In contrast, a graph-based analysis tends to identify better high-value targets for key phrases – and more importantly, it tends to rank true positives higher than the noise, which is not something that a parser-based approach can provide. If there is post-processing involved for contextual analysis, the noun phrases produced by a graph-based approach appear superior – and substantially more performant.
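
For readers who haven't worked with these metrics, here is a minimal sketch of how precision, recall, and F-measure could be scored against an editor's key phrase list. The function name and the toy phrases are hypothetical placeholders, not the data from my tests:

```python
def f_measure(predicted, reference, beta=1.0):
    """Return (precision, recall, F) for predicted phrases vs. a reference set."""
    predicted, reference = set(predicted), set(reference)
    true_pos = len(predicted & reference)
    if true_pos == 0:
        return 0.0, 0.0, 0.0
    precision = true_pos / len(predicted)   # share of predictions that are correct
    recall = true_pos / len(reference)      # share of the reference that was found
    b2 = beta * beta
    f = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f


# Toy phrases for illustration only.
editor_phrases = {"phrase one", "phrase two", "phrase three", "phrase four"}
extracted = ["phrase one", "phrase three", "some noise"]
precision, recall, f1 = f_measure(extracted, editor_phrases)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

Note that this kind of score ignores ranking entirely; it cannot show whether true positives sort above the noise.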

Another point to consider, when analyzing large amounts of text, is that graph-based analysis is relatively simple and efficient to implement using a map-reduce framework such as Hadoop.

The trick is… how to represent a stream of morphemes in natural language as a graph.
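
One representation that appears in the literature on graph-based ranking (TextRank-style methods) is a co-occurrence graph: each distinct token becomes a node, and an edge links tokens which appear within a small window of each other. The sketch below is only an assumption about what such a graph could look like, not necessarily the approach taken in this series; it works on whole words rather than morphemes, and the whitespace tokenizer and window size are placeholders.

```python
from collections import defaultdict


def cooccurrence_graph(tokens, window=2):
    """Link each token to the distinct tokens that follow it within `window` positions."""
    graph = defaultdict(set)
    for i, tok in enumerate(tokens):
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            other = tokens[j]
            if other != tok:
                # Undirected edge: record it in both directions.
                graph[tok].add(other)
                graph[other].add(tok)
    return graph


tokens = "graph based ranking of words in a graph".split()
graph = cooccurrence_graph(tokens, window=2)
for node in sorted(graph):
    print(node, sorted(graph[node]))
```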

Posted by Paco Nathan at 19:17 (0 comments)

Tags: algorithms, grid, hadoop, linguistics

2008-02-20

hadoop, part 2: jyte cred graph

Following from Part 1 in the series, this article takes a look at an initial application in Hadoop, using a simplified PageRank algorithm with graph data from jyte.com to illustrate a gentle introduction to distributed computing. The really cool part is that this runs quickly on my MacBook laptop, and the same Java code can run and scale without modification on Amazon AWS, on an IBM mainframe, and (with minor changes) in the clouds at Yahoo and Google. Source code is available as open source on Google Code at:

The social networking system jyte.com provides means for using OpenID as primary identification for participants – at least one is required for login. Participants then post "claims" on which others vote. They can also give "cred" to other participants, citing specific areas of credibility with tags. The contacts API provides interesting means for managing "social whitelisting".

Okay, that was a terribly dry, academic description, but the bottom line is that jyte is fun. I started using it shortly after its public launch in early 2007. You can find a lot more info on their wiki. Some of the jyte data gets published via their API page – essentially the "cred" graph. Others have been working with that data outside of jyte.com, notably Jim Snow's work on reputation metrics.

Looking at the "cred" graph, it follows a form suitable for link analysis such as the PageRank used by Google. In other words, the cred given by one jyte user to another resembles how web pages link to other web pages. Therefore a PageRank metric can be determined, as Wikipedia describes, "based upon quantity of inbound links as well as importance of the page providing the link." Note that this application uses a simplified PageRank, i.e., no damping term in the difference equation.

Taking the jyte cred graph, inverting its OpenID mapping index, then running a PageRank calculation seemed like a reasonably-sized project. There are approximately 1K nodes in the graph (currently) which provides enough data to make the problem and its analysis interesting, but not so much to make it unwieldy. Sounds like a great first app for Hadoop.

The data as downloaded from jyte.com has a caveat: it has been GZIP'ed twice. Once you get it inflated and loaded into the HDFS distributed file system, as "input", the TSV text file line format looks like:

from_openid to_openid csv cred tags
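
Outside of Hadoop, the double inflation and the line format can be sketched in a few lines of Python. This is an illustration rather than part of the JyteRank code; it assumes three tab-separated fields with the cred tags comma-separated in the third, and the function names and sample URIs are hypothetical.

```python
import gzip


def inflate_twice(raw: bytes) -> str:
    """Undo two layers of gzip compression and decode the result as UTF-8 text."""
    once = gzip.decompress(raw)
    twice = gzip.decompress(once)
    return twice.decode("utf-8")


def parse_cred_line(line: str):
    """Split one TSV line into (from_openid, to_openid, list of cred tags)."""
    fields = line.rstrip("\n").split("\t")
    from_openid, to_openid = fields[0], fields[1]
    tags = fields[2].split(",") if len(fields) > 2 and fields[2] else []
    return from_openid, to_openid, tags


# Round trip on a made-up line, compressed twice like the download.
sample = "http://a.example/\thttp://b.example/\tpython,hadoop\n"
raw = gzip.compress(gzip.compress(sample.encode("utf-8")))
for line in inflate_twice(raw).splitlines():
    print(parse_cred_line(line))
```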

Pass 1 in Hadoop is a job which during its "map" phase takes the downloaded data from a local file, and during the "reduce" phase it aggregates the one-to-many relation of OpenID URIs which give cred to other OpenID URIs. See in the Java source code the method configPass1() in the org.ceteri.JyteRank class, and also the inner classes MapFrom2To and RedFrom2To. The resulting "from2to" TSV text data line format looks like:

from_openid tsv list of to_openids
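
The shape of Pass 1 can be sketched in Python, in the spirit of a Hadoop Streaming job. This is not the Java in MapFrom2To and RedFrom2To; the function names are hypothetical, and the grouping that Hadoop's shuffle would perform between the phases is done here in memory.

```python
from collections import defaultdict


def map_from2to(line):
    """Map: emit a (from_openid, to_openid) pair for one input line."""
    fields = line.rstrip("\n").split("\t")
    yield fields[0], fields[1]


def reduce_from2to(pairs):
    """Reduce: collect every to_openid under the from_openid that gave cred."""
    grouped = defaultdict(list)
    for from_id, to_id in pairs:
        grouped[from_id].append(to_id)
    return dict(grouped)


def run_pass1(lines):
    """Chain map and reduce over an iterable of input lines."""
    pairs = (pair for line in lines for pair in map_from2to(line))
    return reduce_from2to(pairs)


from2to = run_pass1(["a\tb\tx", "a\tc\ty", "b\tc\tz"])
for from_id, to_ids in from2to.items():
    print("\t".join([from_id] + to_ids))
```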

Pass 2 is a very simple job which initializes rank values for each OpenID URI in the graph – starting simply with a value of 1. We'll iterate to change that, later. See the method configPass2() and also the inner classes MapInit and RedInit. The resulting "prevrank" TSV text data line format looks like:

from_openid rank
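
A Python sketch of the same initialization, again as an illustration rather than the MapInit and RedInit code. It assumes every URI that appears as either a source or a target receives a starting rank; `init_ranks` is a hypothetical name.

```python
def init_ranks(from2to, initial=1.0):
    """Give every OpenID URI seen in the graph the same starting rank."""
    nodes = set(from2to)
    for targets in from2to.values():
        nodes.update(targets)
    return {node: initial for node in sorted(nodes)}


prevrank = init_ranks({"a": ["b", "c"], "b": ["c"]})
print(prevrank)  # {'a': 1.0, 'b': 1.0, 'c': 1.0}
```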

Pass 3 performs a join of "from2to" data with "prevrank" data, then expands that into individual terms in the PageRank summation, emitting a partial calculation for each OpenID URI. See the method configPass3() and also the inner classes MapExpand and RedExpand. The resulting "elemrank" TSV text data line format looks like:

to_openid rank_term
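
In Python, the join and expansion might look like the sketch below. It assumes the usual simplified PageRank term, where a node's current rank is split evenly across its outbound links; the Java in MapExpand and RedExpand may differ in detail, and `expand_terms` is a hypothetical name.

```python
def expand_terms(from2to, prevrank):
    """Join link lists with ranks, then emit one (to_openid, rank_term) per link."""
    for from_id, targets in from2to.items():
        if not targets:
            continue
        # A node with no known rank contributes nothing.
        share = prevrank.get(from_id, 0.0) / len(targets)
        for to_id in targets:
            yield to_id, share


from2to = {"a": ["b", "c"], "b": ["c"]}
prevrank = {"a": 1.0, "b": 1.0, "c": 1.0}
for to_id, term in expand_terms(from2to, prevrank):
    print(f"{to_id}\t{term}")
```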

Pass 4 aggregates the "elemrank" data to sum the terms for each OpenID URI in the graph, reducing them to a rank metric for each. See the method configPass4() and also the inner classes MapSum and RedSum. The resulting "thisrank" TSV text data line format looks like:

to_openid rank
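
The summation is the simplest step to sketch. In this Python illustration (hypothetical names, not the MapSum and RedSum code), the input is any iterable of (to_openid, rank_term) pairs in the "elemrank" shape:

```python
from collections import defaultdict


def sum_terms(elemrank):
    """Add up the partial rank terms emitted for each to_openid."""
    totals = defaultdict(float)
    for to_id, term in elemrank:
        totals[to_id] += term
    return dict(totals)


thisrank = sum_terms([("b", 0.5), ("c", 0.5), ("c", 1.0)])
print(thisrank)  # {'b': 0.5, 'c': 1.5}
```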

The trick with PageRank is that it uses a difference equation to represent the equilibrium values in a network, iterating through sources and sinks. So the calculation generally needs to iterate before it converges to a reasonably good approximation of the solution at equilibrium. In other words, after completing Pass 4, the code swaps "thisrank" in place of "prevrank" and then iterates on Pass 3 and Pass 4. Rinse, lather, repeat – until the difference between two successive iterations produces a relatively small error.
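
The swap-and-iterate loop can be sketched in Python as follows. This is an in-memory illustration, not the Hadoop driver code: `step` folds Pass 3 and Pass 4 together, and the `max_iters` and `tolerance` defaults are arbitrary assumptions.

```python
def step(from2to, prevrank):
    """One simplified PageRank update (no damping): expand terms, then sum them."""
    thisrank = {}
    for from_id, targets in from2to.items():
        if not targets:
            continue
        share = prevrank.get(from_id, 0.0) / len(targets)
        for to_id in targets:
            thisrank[to_id] = thisrank.get(to_id, 0.0) + share
    return thisrank


def iterate(from2to, prevrank, max_iters=50, tolerance=1e-6):
    """Repeat the update until successive ranks differ by less than `tolerance`."""
    iterations = 0
    while iterations < max_iters:
        thisrank = step(from2to, prevrank)
        nodes = set(prevrank) | set(thisrank)
        error = max(
            (abs(thisrank.get(n, 0.0) - prevrank.get(n, 0.0)) for n in nodes),
            default=0.0,
        )
        prevrank = thisrank  # swap "thisrank" in place of "prevrank"
        iterations += 1
        if error < tolerance:
            break
    return prevrank, iterations


# A three-node cycle is already at equilibrium, so one pass is enough.
cycle = {"a": ["b"], "b": ["c"], "c": ["a"]}
ranks, count = iterate(cycle, {"a": 1.0, "b": 1.0, "c": 1.0})
print(ranks, count)
```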

Whereas Google allegedly runs their PageRank algorithm through thousands of iterations, running for several hours each day, I have a hunch that jyte.com does not run many iterations to determine their cred points. Stabbing blindly, I found that using 9 iterations seems to produce a close correlation to the actual values listed as jyte.com cred points. A linear regression can then be used to approximate the actual values:
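
As a sketch of that last step, an ordinary least-squares fit maps computed ranks onto published cred points. The numbers below are toy values chosen for illustration, not jyte data, and `fit_line` is a hypothetical helper:

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy values only: computed ranks (x) against published cred points (y).
computed_rank = [1.0, 2.0, 3.0, 4.0]
cred_points = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(computed_rank, cred_points)
print(slope, intercept)  # 2.0 1.0
```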

My analysis here is quite rough, and the code is far from optimal. For example, I'm handling data in-between the map and reduce phases simply as TSV text. There could be much improvement by using subclassed InputFormat and OutputFormat definitions for my own data formats. Even so, the results look pretty good: not bad for one afternoon's work, eh?

I have big reservations about PageRank for web pages, since it assumes that an equilibrium state effectively models attention given to web pages. PageRank provides a mathematical model for a kind of "coin toss", where a user looking at a browser either chooses to navigate to some random URL (with probability 0.15) or they follow one of the links on the current page (with probability 0.85). In practice that implies that some important web pages will experience substantial latency before PageRank begins to "notice" them, since PageRank must wait for (a) other pages to link to the new page, and (b) Google's web crawlers to visit and analyze those changes. That process can take several days.
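
For comparison with the simplified version used on the jyte data, here is a sketch of one update step with the "coin toss" included, using the commonly published normalized form of the damped equation. The function name is hypothetical, and the sketch assumes every node already has an entry in `prevrank`.

```python
def damped_step(from2to, prevrank, damping=0.85):
    """One PageRank update with a damping term for the random-jump 'coin toss'."""
    n = len(prevrank)
    # Every node gets an equal share of the random-jump probability.
    thisrank = {node: (1.0 - damping) / n for node in prevrank}
    for from_id, targets in from2to.items():
        if not targets:
            continue
        # The remaining probability follows links, split across outbound edges.
        share = damping * prevrank[from_id] / len(targets)
        for to_id in targets:
            thisrank[to_id] += share
    return thisrank


cycle = {"a": ["b"], "b": ["c"], "c": ["a"]}
start = {"a": 1 / 3, "b": 1 / 3, "c": 1 / 3}
print(damped_step(cycle, start))
```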

A more realistic model of human behavior might take into account that – contrary to Google PageRank assumptions – people do not navigate to some random URL because it is in their bookmarks or is their individual home page. That might have been the general case in 1998, but these days I get my fix of random URLs because of email, IM, RSS, etc., all pushing them out to me. In other words, there is an implied economy of attention (assuming I only look at one browser window at a time) which has drivers that are external to links in the World Wide Web. In lieu of using equilibrium models, the link analysis might consider some URLs as "pumps" and then take a far-from-equilibrium approach.

Certainly, a site such as CNN.com demonstrates that level of network effects, and news events function as attention drivers.

But I digress.

A simplified PageRank analysis based on an equilibrium model appears to work fine enough to approximate jyte.com cred points. It also illustrates how to program within the Hadoop framework. I have ulterior motives for getting jyte cred graph data into Hadoop – which we'll examine in the next part of this series.

Posted by Paco Nathan at 11:35 (0 comments)

Tags: algorithms, autopoiesis, digerati, grid, hadoop